Lightweight Adapter Tuning for Multilingual Speech Translation

Annual Meeting of the Association for Computational Linguistics (ACL), 2021

Abstract

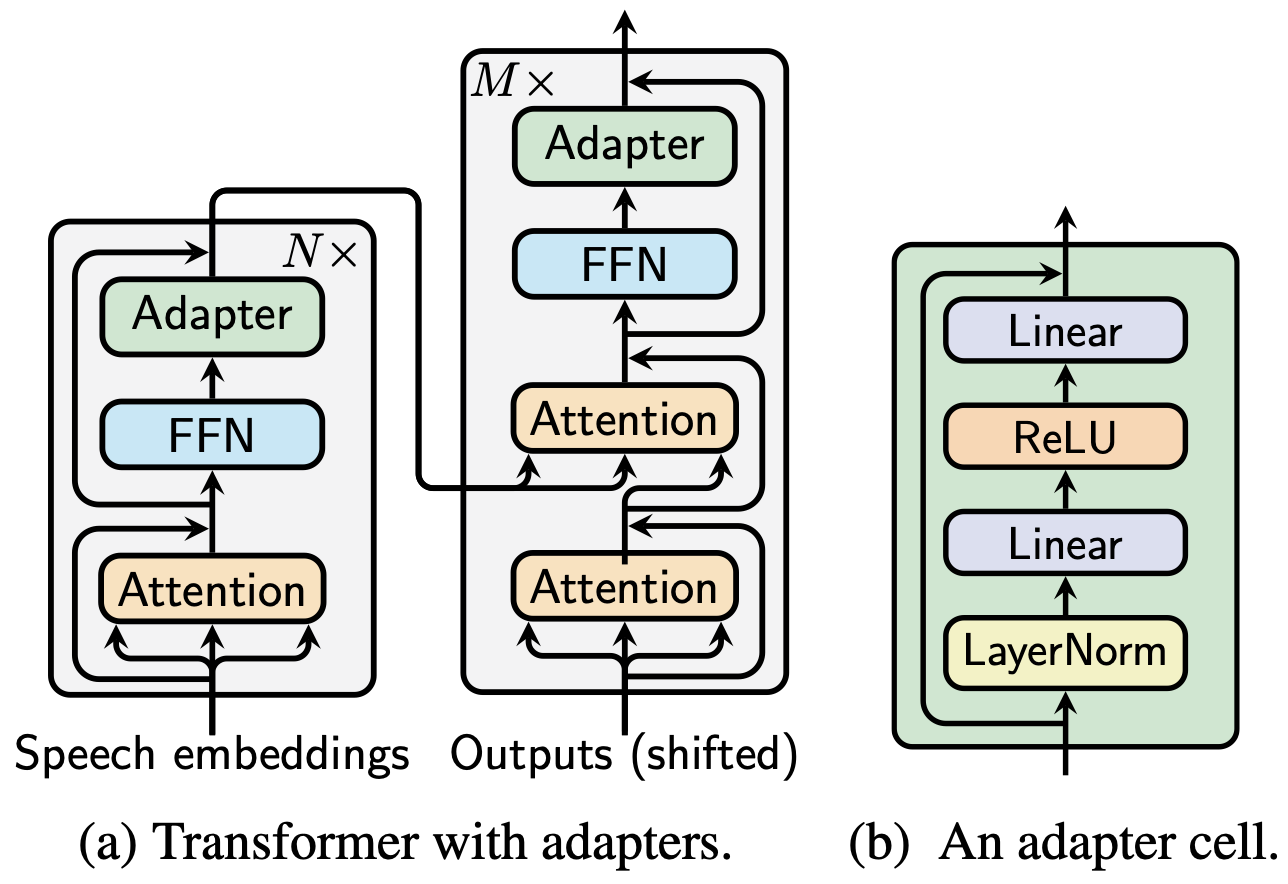

Adapter modules were recently introduced as an efficient alternative to fine-tuning in NLP. Adapter tuning consists of freezing the pre-trained parameters of a model and injecting lightweight modules between layers, which adds only a small number of task-specific trainable parameters. While adapter tuning has been investigated for multilingual neural machine translation, this paper proposes a comprehensive analysis of adapters for multilingual speech translation (ST). Starting from different pre-trained models (a multilingual ST model trained on parallel data, or a multilingual BART (mBART) model trained on non-parallel multilingual data), we show that adapters can be used to: (a) efficiently specialize ST to specific language pairs at a low extra cost in terms of parameters, and (b) transfer from an automatic speech recognition (ASR) task and an mBART pre-trained model to a multilingual ST task. Experiments show that adapter tuning offers results competitive with full fine-tuning while being much more parameter-efficient.
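The adapter idea described above can be illustrated with a minimal, dependency-free Python sketch. It assumes the common bottleneck design (layer normalization, down-projection, ReLU, up-projection, residual connection); the names `Adapter`, `adapter_param_count`, and `transformer_layer_param_count`, the zero initialization of the up-projection, and the example sizes are illustrative assumptions, not taken from the paper or its code release.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def layer_norm(x: Vector, gamma: Vector, beta: Vector, eps: float = 1e-5) -> Vector:
    """Normalize x to zero mean and unit variance, then scale and shift."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [g * (v - mean) / math.sqrt(var + eps) + b for v, g, b in zip(x, gamma, beta)]


def matvec(w: Matrix, x: Vector, bias: Vector) -> Vector:
    """Compute W x + bias, where W has shape (out_dim, in_dim)."""
    return [sum(wij * xj for wij, xj in zip(row, x)) + bi for row, bi in zip(w, bias)]


class Adapter:
    """Hypothetical bottleneck adapter: LayerNorm -> down-proj -> ReLU -> up-proj -> residual.

    In adapter tuning, only these parameters would be updated; the pre-trained
    layer the adapter is inserted after stays frozen (no training loop shown).
    """

    def __init__(self, d_model: int, bottleneck: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.gamma = [1.0] * d_model
        self.beta = [0.0] * d_model
        self.w_down = [[rng.gauss(0.0, 0.02) for _ in range(d_model)] for _ in range(bottleneck)]
        self.b_down = [0.0] * bottleneck
        # Assumption of this sketch: a zero-initialized up-projection makes the
        # adapter an identity map at the start, so inserting it leaves the
        # frozen model's output unchanged before any training.
        self.w_up = [[0.0] * bottleneck for _ in range(d_model)]
        self.b_up = [0.0] * d_model

    def __call__(self, h: Vector) -> Vector:
        z = layer_norm(h, self.gamma, self.beta)
        z = [max(0.0, v) for v in matvec(self.w_down, z, self.b_down)]  # ReLU
        z = matvec(self.w_up, z, self.b_up)
        return [hi + zi for hi, zi in zip(h, z)]  # residual connection


def adapter_param_count(d_model: int, bottleneck: int) -> int:
    """Trainable parameters in one adapter (weights, biases, LayerNorm)."""
    down = d_model * bottleneck + bottleneck
    up = bottleneck * d_model + d_model
    norm = 2 * d_model
    return down + up + norm


def transformer_layer_param_count(d_model: int, d_ffn: int) -> int:
    """Parameters in a standard encoder layer (self-attention, FFN, two LayerNorms)."""
    attention = 4 * (d_model * d_model + d_model)  # Q, K, V and output projections
    ffn = d_model * d_ffn + d_ffn + d_ffn * d_model + d_model
    norms = 2 * 2 * d_model
    return attention + ffn + norms


# Hypothetical sizes, chosen only for illustration.
d_model, bottleneck = 512, 64
adapter_params = adapter_param_count(d_model, bottleneck)          # 67136
layer_params = transformer_layer_param_count(d_model, 4 * d_model)  # 3152384
print(f"adapter/layer ratio: {adapter_params / layer_params:.2%}")
```

Under these hypothetical sizes, one adapter adds about 67K trainable parameters, roughly 2% of a 3.15M-parameter Transformer layer, which is the kind of saving that makes adapter tuning attractive compared with updating every weight during full fine-tuning.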

Citation

@inproceedings{le2021lightweight,
  author    = {Hang Le and
               Juan Miguel Pino and
               Changhan Wang and
               Jiatao Gu and
               Didier Schwab and
               Laurent Besacier},
  title     = {Lightweight Adapter Tuning for Multilingual Speech Translation},
  booktitle = {Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL)},
  pages     = {817--824},
  publisher = {Association for Computational Linguistics},
  year      = {2021}
}