Task Agnostic and Task Specific Self-Supervised Learning from Speech with LeBenchmark

Neural Information Processing Systems (NeurIPS), 2021


Abstract

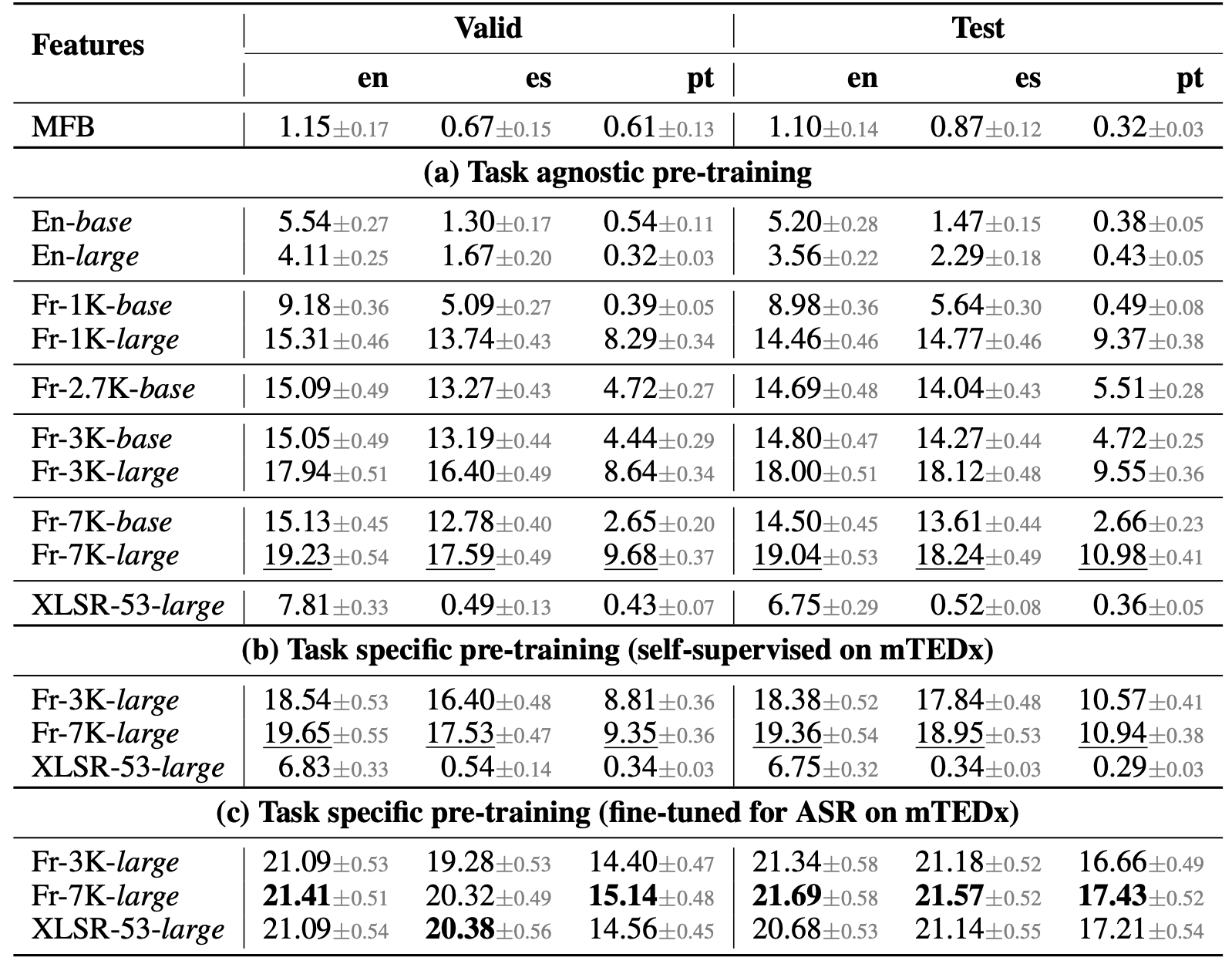

Self-Supervised Learning (SSL) has yielded remarkable improvements in many domains, including computer vision, natural language processing and speech processing, by leveraging large amounts of unlabeled data. In the specific context of speech, however, and despite promising results, there is a clear lack of standardization in the evaluation process needed for comprehensive comparisons of these models. The issue is even more pronounced for SSL approaches in languages other than English. We present LeBenchmark, an open-source and reproducible framework for assessing SSL from French speech data. It includes documented, large-scale and heterogeneous corpora, seven pretrained SSL wav2vec 2.0 models shared with the community, and a clear evaluation protocol made of four downstream tasks along with their scoring scripts: automatic speech recognition, spoken language understanding, automatic speech translation and automatic emotion recognition. For the first time, SSL models are analyzed and compared on these tasks from both a task-agnostic (i.e. frozen) and a task-specific (i.e. fine-tuned w.r.t. the downstream task) perspective. We report state-of-the-art performance on most of the French tasks considered and provide a readable evaluation set-up for the development of future SSL models for speech processing.
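The abstract contrasts two evaluation settings: task-agnostic (the pretrained SSL encoder is frozen and only a downstream head is trained) and task-specific (the encoder is fine-tuned together with the head). The sketch below is a deliberately tiny, hypothetical illustration of that distinction, not LeBenchmark code and not wav2vec 2.0: `ToyModel`, `w_enc` (standing in for the pretrained encoder) and `w_head` (standing in for the downstream head) are invented names, and training is one-sample SGD on a squared error.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ToyModel:
    """Hypothetical stand-in for 'SSL encoder + downstream head' (not wav2vec 2.0)."""
    w_enc: float   # plays the role of the pretrained SSL encoder weights
    w_head: float  # plays the role of the task-specific downstream head

    def forward(self, x: float) -> tuple[float, float]:
        h = self.w_enc * x         # "representation" produced by the encoder
        return h, self.w_head * h  # head prediction computed from that representation


def train_step(model: ToyModel, x: float, y: float, lr: float, freeze_encoder: bool) -> float:
    """One SGD step on (y_hat - y)**2; returns the loss measured before the update."""
    h, y_hat = model.forward(x)
    err = y_hat - y
    loss = err ** 2
    # Both gradients are computed from the pre-update weights (chain rule).
    grad_head = 2 * err * h
    grad_enc = 2 * err * model.w_head * x
    # The head is trained in both settings.
    model.w_head -= lr * grad_head
    if not freeze_encoder:
        # Task-specific (fine-tuned) setting: the encoder is updated as well.
        model.w_enc -= lr * grad_enc
    return loss


# Same initialization, same data; only the freezing policy differs.
frozen = ToyModel(w_enc=0.5, w_head=1.0)
tuned = ToyModel(w_enc=0.5, w_head=1.0)
for _ in range(3):
    train_step(frozen, x=2.0, y=3.0, lr=0.05, freeze_encoder=True)
    train_step(tuned, x=2.0, y=3.0, lr=0.05, freeze_encoder=False)
print(frozen.w_enc, tuned.w_enc)
```

In the frozen setting the encoder weight stays at its pretrained value, so the downstream result measures how useful the representation is as-is; in the fine-tuned setting the representation itself adapts to the task.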

Citation

@inproceedings{evain2021task,
  author    = {Sol{\`{e}}ne Evain and
               Ha Nguyen and
               Hang Le and
               Marcely Zanon Boito and
               Salima Mdhaffar and
               Sina Alisamir and
               Ziyi Tong and
               Natalia A. Tomashenko and
               Marco Dinarelli and
               Titouan Parcollet and
               Alexandre Allauzen and
               Yannick Est{\`{e}}ve and
               Benjamin Lecouteux and
               Fran{\c{c}}ois Portet and
               Solange Rossato and
               Fabien Ringeval and
               Didier Schwab and
               Laurent Besacier},
  title     = {Task Agnostic and Task Specific Self-Supervised Learning from Speech
               with LeBenchmark},
  booktitle = {Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS)},
  year      = {2021}
}